Introducing LLMs

Large language models (LLMs) are a type of artificial intelligence (AI) model trained on massive amounts of text data. This training allows them to understand and generate human-quality language. LLMs are still evolving, but they have already proven effective at a variety of tasks, including translation, summarisation, and question answering.

How LLMs Work

LLMs are built with a technique called deep learning, a type of machine learning that is particularly well suited to tasks such as natural language processing (NLP). In deep learning, a model (typically a neural network with many layers) is trained on a massive amount of data, learns the patterns in that data, and then uses those patterns to make predictions about new inputs.

For LLMs, the training data is text, which can come from a variety of sources, such as books, articles, and websites. During training, the model learns to predict the next token (a word or a piece of a word) from the text that precedes it. As the LLM is trained on more data, it learns more of the patterns of human language, which allows it to generate text that is grammatically correct and semantically meaningful.
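To make "learn patterns, then predict the next word" concrete, here is a deliberately tiny sketch. It is a toy bigram model, not a neural network and not how LLMs are actually implemented: it counts which word follows which in a training text and predicts the most frequent follower. The names `BigramModel`, `Train`, and `PredictNext` are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy bigram "language model" (illustration only, not a real LLM):
// it counts which word follows which and predicts the most frequent follower.
public static class BigramModel
{
    // Maps each word to the counts of the words observed right after it.
    public static Dictionary<string, Dictionary<string, int>> Train(string corpus)
    {
        var words = corpus.ToLowerInvariant()
            .Split(new[] { ' ', '\n', '\t' }, StringSplitOptions.RemoveEmptyEntries);
        var counts = new Dictionary<string, Dictionary<string, int>>();

        for (int i = 0; i < words.Length - 1; i++)
        {
            if (!counts.TryGetValue(words[i], out var followers))
            {
                followers = new Dictionary<string, int>();
                counts[words[i]] = followers;
            }
            // Increment the count for the word that follows words[i].
            followers[words[i + 1]] = followers.GetValueOrDefault(words[i + 1]) + 1;
        }
        return counts;
    }

    // Returns the most likely next word, or null if the word never had a follower.
    public static string? PredictNext(Dictionary<string, Dictionary<string, int>> model, string word)
    {
        if (!model.TryGetValue(word.ToLowerInvariant(), out var followers))
            return null;
        return followers.OrderByDescending(kv => kv.Value).First().Key;
    }
}
```

For example, after training on "the cat sat on the mat the cat ate", `PredictNext(model, "the")` returns "cat". An LLM replaces these raw counts with billions of learned parameters and considers far more context than a single preceding word.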

What is Semantic Kernel?

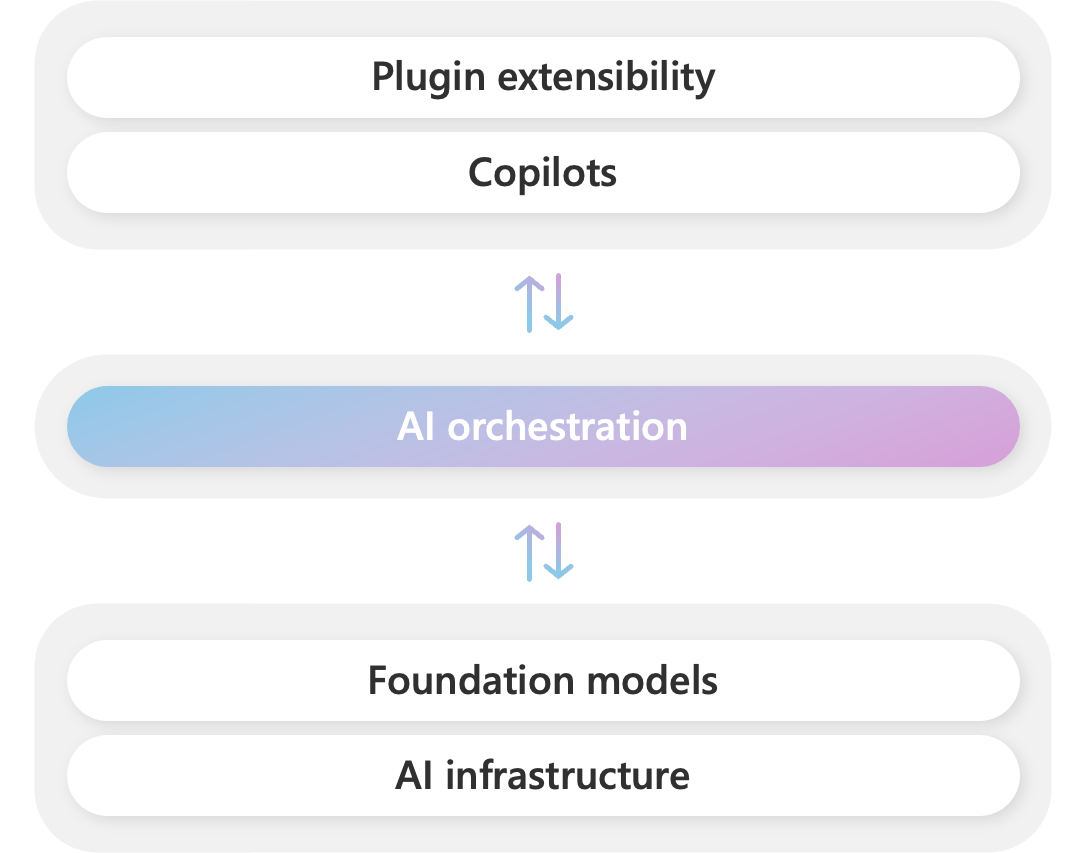

Semantic Kernel is an open-source SDK that empowers developers to seamlessly integrate AI capabilities into their applications. It acts as a bridge between the world of conventional programming languages like C# and Python and the realm of powerful AI services such as OpenAI, Azure OpenAI, and Hugging Face. By leveraging Semantic Kernel, developers can craft AI-powered applications that combine the best of both worlds: the expressiveness and control of traditional programming languages with the cutting-edge capabilities of AI models.

At its core, Semantic Kernel lets developers compose functions: AI prompts and regular code can be chained so that the output of one step feeds the next, and the whole sequence is executed together. This modular design enables developers to build complex AI pipelines in a structured and maintainable way. Additionally, Semantic Kernel provides a rich ecosystem of connectors and plugins that facilitate the integration of various AI models and services.
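The chaining idea can be sketched without any AI service. In this hypothetical example (the `Pipeline` class is not part of Semantic Kernel), each step is an async function whose output becomes the next step's input; in Semantic Kernel the steps would be prompt-based or native functions.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical sketch of function chaining: plain delegates stand in for
// Semantic Kernel functions so the example runs without an AI service.
public static class Pipeline
{
    public static async Task<string> RunAsync(string input, IEnumerable<Func<string, Task<string>>> steps)
    {
        var current = input;
        foreach (var step in steps)
        {
            // The output of one step becomes the input of the next.
            current = await step(current);
        }
        return current;
    }
}
```

Running it with an "upper-case" step followed by an "append !" step turns "hello" into "HELLO!". Keeping each step small and single-purpose is what makes such pipelines easy to rearrange and test.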

Whether you're aiming to develop AI-powered chatbots, automate repetitive tasks, or enhance your applications with natural language processing capabilities, Semantic Kernel stands as a valuable tool that empowers developers to harness the power of AI and create innovative solutions.

Your first steps

OK, let's take our first steps and create the classic Hello World sample, as we would in any programming language. To do this, we need a few ingredients.

NuGet packages

Microsoft.SemanticKernel

Microsoft.SemanticKernel.Plugins.Core

At the time of writing, these packages are a pre-release (beta8), so the API may differ in later versions. The next steps are straightforward and taken directly from the Microsoft documentation.

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Plugins.Core;

// Build a kernel; TimePlugin is native code, so no AI service is needed yet.
var kernel = Kernel.Builder
    .Build();

// Import the plugin's functions and run its "Today" function.
var time = kernel.ImportFunctions(new TimePlugin());
var result = await kernel.RunAsync(time["Today"]);

Console.WriteLine(result);
```

That is our Hello World. Now it's time to build something more interesting: a simple Azure Functions project that shows how to chat with an LLM deployed in Azure OpenAI.

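First, we create a Startup class that registers the required services. It builds a kernel backed by an Azure OpenAI chat completion service, reading the deployment name, endpoint, and API key from environment variables (the Function App's settings).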

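```csharp
using System;
using Microsoft.Azure.Functions.Extensions.DependencyInjection;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.SemanticKernel;

// Tells the Functions host to run this class at startup.
[assembly: FunctionsStartup(typeof(Startup))]

public class Startup : FunctionsStartup
{
    public override void Configure(IFunctionsHostBuilder builder)
    {
        // Scoped: one kernel per function execution.
        builder.Services.AddScoped(sp =>
        {
            // Read settings and fail fast with a clear message if one is missing.
            var deploymentName = Environment.GetEnvironmentVariable("DeploymentName")
                ?? throw new InvalidOperationException("DeploymentName is not set.");
            var endpoint = Environment.GetEnvironmentVariable("Endpoint")
                ?? throw new InvalidOperationException("Endpoint is not set.");
            var apiKey = Environment.GetEnvironmentVariable("ApiKey")
                ?? throw new InvalidOperationException("ApiKey is not set.");

            var kernel = new KernelBuilder()
                .WithAzureChatCompletionService(deploymentName: deploymentName, endpoint: endpoint, apiKey: apiKey, alsoAsTextCompletion: true, serviceId: "chat")
                .Build();

            return kernel;
        });
    }
}
```

Next, let's create our function code.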

```csharp
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.AI.ChatCompletion;

// Request/response shapes (assumed; their definitions are not shown in the original sample).
public record Ask(string sender, string text);
public record AskResult(string role, string content, ChatHistory history);

public class ChatFunction
{
    private readonly ILogger<ChatFunction> _logger;
    private readonly IChatCompletion _chatCompletion;
    private readonly ChatHistory _chatHistory;

    public ChatFunction(IKernel kernel, ILogger<ChatFunction> logger)
    {
        _logger = logger;
        // Resolve the chat service registered in Startup and start an empty conversation.
        _chatCompletion = kernel.GetService<IChatCompletion>();
        _chatHistory = _chatCompletion.CreateNewChat();
    }

    [FunctionName("ChatFunction")]
    public async Task<IActionResult> Run(
        [HttpTrigger(AuthorizationLevel.Function, "post", Route = "chats/{chatId:int}/messages")] HttpRequest req,
        int chatId)
    {
        _logger.LogInformation("C# HTTP trigger function processed a request.");

        var ask = JsonSerializer.Deserialize<Ask>(await req.ReadAsStringAsync());
        if (ask is null || string.IsNullOrWhiteSpace(ask.text))
            return new BadRequestObjectResult("Request body must contain a non-empty 'text'.");

        _logger.LogDebug("ChatId: {ChatId}, Sender: {Sender}, Text: {Text}", chatId, ask.sender, ask.text);

        // Add the user's message, ask the model for a reply, and record the reply once.
        _chatHistory.AddUserMessage(ask.text);
        var chatResult = (await _chatCompletion.GetChatCompletionsAsync(_chatHistory))[0];
        var chatMessage = await chatResult.GetChatMessageAsync();
        _chatHistory.AddAssistantMessage(chatMessage.Content);

        _logger.LogDebug("Chat history: {History}", JsonSerializer.Serialize(_chatHistory));

        var askResult = new AskResult(chatMessage.Role.ToString(), chatMessage.Content, _chatHistory);
        return new OkObjectResult(askResult);
    }
}
```

This demo shows that we can quickly build a working chat-style REST API. Of course, it is still missing features such as persistent conversation history and context: the ChatHistory lives in memory inside the function instance, so it is not kept between requests.
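One way to keep a conversation per `chatId` is to store the messages outside the function instance and rebuild the ChatHistory (with `AddUserMessage` and `AddAssistantMessage`) at the start of each request. The sketch below is a hypothetical, process-local store built on `ConcurrentDictionary`; `ChatHistoryStore` and `StoredMessage` are illustrative names, not Semantic Kernel types. Because it lives in memory, it would not survive restarts or work across scaled-out instances, where durable storage such as a database would be needed.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical message record kept by the store.
public record StoredMessage(string Role, string Content);

// Hypothetical in-memory store: one message list per chatId, shared by all
// invocations in the same host process only.
public static class ChatHistoryStore
{
    private static readonly ConcurrentDictionary<int, List<StoredMessage>> Chats = new();

    // Appends a message, creating the chat on first use.
    public static void Append(int chatId, string role, string content)
    {
        var messages = Chats.GetOrAdd(chatId, _ => new List<StoredMessage>());
        lock (messages) // List<T> is not thread-safe on its own.
        {
            messages.Add(new StoredMessage(role, content));
        }
    }

    // Returns a snapshot copy so callers cannot mutate the stored list.
    public static IReadOnlyList<StoredMessage> Get(int chatId)
    {
        if (!Chats.TryGetValue(chatId, out var messages))
            return new List<StoredMessage>();
        lock (messages)
        {
            return messages.ToArray();
        }
    }
}
```

In the function, you would replay `ChatHistoryStore.Get(chatId)` into the new ChatHistory before calling the model, then `Append` both the user's message and the assistant's reply.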

Why do we need Semantic Functions?

OK, let's go further. Semantic Kernel has the concept of plugins: a grouping of functions, which can be the prompts we develop plus some lines of custom code.

Semantic functions (prompts sent to the LLM) give our application the ability to hear and talk. They are the heart of Semantic Kernel (SK) and arguably its most valuable part. For example, consider the sample below.

We have a Chat Plugin that can be composed of several functions:

Chat function

Extract audience function

Extract intention function

Summarize conversation
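Under the hood, a semantic function is essentially a prompt template whose variables are filled in before the prompt is sent to the model; Semantic Kernel templates use placeholders such as `{{$input}}`. The hypothetical sketch below shows that idea with a minimal regex-based renderer and an assumed prompt for the "Extract intention" function. It is not SK's template engine, which supports much more (for example, calling other functions from inside a template).

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical, simplified semantic function: a prompt template with
// {{$variable}} placeholders that is filled in before calling the LLM.
public static class PromptTemplate
{
    // Matches {{$name}}, allowing optional spaces inside the braces.
    private static readonly Regex Placeholder = new(@"\{\{\s*\$(\w+)\s*\}\}");

    public static string Render(string template, IReadOnlyDictionary<string, string> variables)
    {
        // Replace each placeholder with its value; unknown variables become empty strings.
        return Placeholder.Replace(template, m =>
            variables.TryGetValue(m.Groups[1].Value, out var value) ? value : string.Empty);
    }

    // Assumed prompt text for the "Extract intention" function.
    public const string ExtractIntention =
        "Read the user's message and state their intention in one short sentence.\n" +
        "Message: {{$input}}\n" +
        "Intention:";
}
```

The rendered string is what would be sent to the chat or text completion service; the other functions in the Chat Plugin would differ mainly in their prompt text.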

Why do we need Native Functions?

In many scenarios, we also need to call an external API or load something from a database. This is where native functions come into play.

Native functions are the part of the application, written in C#, Python, or Java, that calls external data sources (for example, to load memories for our LLM) or executes actions such as mathematical calculations, which LLMs do not perform reliably.
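As an example of work better left to code, here is a hypothetical native function for the investment question in the next section. It assumes the growth rate is annual and compounded yearly, and uses `decimal` so the arithmetic is exact; `StockMath` and `ProjectValue` are illustrative names, not part of Semantic Kernel.

```csharp
using System;

// Hypothetical native function: projects the value of a stock holding,
// assuming a constant annual growth rate compounded once per year.
public static class StockMath
{
    public static decimal ProjectValue(int shares, decimal currentPrice, decimal annualGrowthRate, int years)
    {
        if (shares < 0 || currentPrice < 0 || years < 0)
            throw new ArgumentException("Shares, price, and years must be non-negative.");

        decimal price = currentPrice;
        for (int year = 0; year < years; year++)
        {
            // Apply one year of growth to the per-share price.
            price *= 1 + annualGrowthRate;
        }
        return shares * price;
    }
}
```

For 100 shares at a hypothetical current price of 100 with 10% annual growth over 5 years, `ProjectValue(100, 100m, 0.10m, 5)` returns 16105.1, because each share grows to 100 * 1.1^5 = 161.051. The current price itself would come from a stocks API, which is another native function.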

The Plan

In the samples above, we executed each function manually, which is not the most convenient approach. Instead, we can ask the LLM to help find the best combination of plugins and their functions to achieve a specified goal; in Semantic Kernel, this is the job of a planner.

Let's assume that the user asks: "I want to calculate my investment. How much will my stocks be worth if the stock price rises by about 10% over the next 5 years? My portfolio has 100 Apple shares."

The planner could then create a plan such as:

Extract audience - semantic

Extract intention - semantic

Call stocks API - native

Calculate stock price - native

Summarize conversation - semantic
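Executing such a plan boils down to running the steps in order while passing shared state between them. The sketch below is hypothetical (`PlanStep`, `StepKind`, and `PlanRunner` are not Semantic Kernel types): the steps are hard-coded, and a semantic step would call the LLM instead of a local delegate. A real planner generates the steps from the user's goal.

```csharp
using System;
using System.Collections.Generic;

// Whether a step would call the LLM (semantic) or run plain code (native).
public enum StepKind { Semantic, Native }

// One plan step: a name, its kind, and an action that reads/writes shared context.
public record PlanStep(string Name, StepKind Kind, Action<Dictionary<string, string>> Execute);

public static class PlanRunner
{
    // Runs the steps in order and returns a log of what was executed.
    public static List<string> Run(IEnumerable<PlanStep> plan, Dictionary<string, string> context)
    {
        var log = new List<string>();
        foreach (var step in plan)
        {
            // Each step can use values written by earlier steps.
            step.Execute(context);
            log.Add($"{step.Name} ({step.Kind.ToString().ToLowerInvariant()})");
        }
        return log;
    }
}
```

Sharing a single context dictionary keeps steps decoupled: "Call stocks API" can write a price that "Calculate stock price" later reads, without either step knowing about the other.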

Wrap up

As we wrap up this exploration, Semantic Kernel emerges as a powerful tool for developers seeking to harness the potential of AI within their applications. Whether creating chatbots, automating tasks, or enhancing applications with natural language processing, Semantic Kernel provides a versatile platform for innovation in the AI landscape. Cheers to the exciting possibilities that lie ahead in the realm of Semantic Kernel and AI development! 🚀